As always, Survic raised excellent questions and gave excellent suggestions about my previous post. Survic's comments and questions are in bold.

**“Business Object Manager” (I guess it is the “Façade Object” -- it is very popular to use "XX_Manager" and "XX_Facade" interchangeably)**

The Business Object Manager is a mediator between the Business/Entity Object and the Data Object layer. It provides an opportunity to aggregate business objects on the database server and send the aggregate to the application server in a single call. This element is missing in Jeffrey’s architecture (I could be wrong) and in the Pet Shop architecture (I am very sure, because I was burnt by it).

It also allows the Business Object layer and the Business Transfer Object to be a single layer instead of separate layers, as shown in the code.

**“Process Object” (I guess it is the “Workflow Object”)**

Yes, Survic is right.

All the Workflow logic, additional processing, co-ordination and control logic will go there.

**“Repository Object” (I’m pretty sure it is the “Façade Object”).**

In my case, that is the responsibility of the Gateway/Factory layer. I may take it out of the architecture diagram.

**“Application architectures” and “service architectures” are critically different?**

No. There are subtle but major design differences.

SOA = Good Distributed Programming Practices + Message Version Control + More Loose Coupling

In a distributed or client/server architecture, you generally have control over the client applications. In my internal application architecture, I can change the Business Object layer, regenerate the proxy objects, recompile the Gateway/Factory layer, and make the matching changes to the UI. So there is some tight coupling between the server-side component and the consumer application; basically, I am sharing code with the consumer application. Performance is a big concern. It is more of an RPC style of communication.

Distributed computing is also more about end-to-end integration: Component A connects to Component B, and Component B connects to Component C.

In a Service Oriented Architecture, you generally don't have control over the client applications or other consumer services. You have to be more careful about version control. You share a contract, and chances are that your application has to support multiple contracts. In that scenario, I design the incoming message as an XML string and share the XML Schema with the client. That way the interface of the service always remains the same (no need to regenerate the WSDL) while the message structure may change. It is more of a document style of communication.

SOA is more like a workflow: Component A may connect to Component B, or maybe to Component C.
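To make the document-style idea concrete, here is a minimal sketch of how a service could validate an incoming XML string against the schema it shares with its clients. The class name `ContactMessageValidator`, the `ContactRequest` element, and the schema itself are made up for illustration; only the `System.Xml` and `System.Xml.Schema` types are real framework APIs.

```csharp
using System;
using System.IO;
using System.Xml;
using System.Xml.Schema;

// Hypothetical helper: validates an incoming message against a shared schema.
public static class ContactMessageValidator
{
    // Illustrative schema (an assumption, not the schema from the real project).
    private const string ContactSchemaV1 =
@"<xs:schema xmlns:xs=""http://www.w3.org/2001/XMLSchema"">
  <xs:element name=""ContactRequest"">
    <xs:complexType>
      <xs:sequence>
        <xs:element name=""UserName"" type=""xs:string"" />
      </xs:sequence>
      <xs:attribute name=""version"" type=""xs:string"" use=""required"" />
    </xs:complexType>
  </xs:element>
</xs:schema>";

    public static bool IsValid(string messageXml)
    {
        XmlSchemaSet schemas = new XmlSchemaSet();
        schemas.Add(null, XmlReader.Create(new StringReader(ContactSchemaV1)));

        XmlReaderSettings settings = new XmlReaderSettings();
        settings.ValidationType = ValidationType.Schema;
        settings.Schemas = schemas;

        bool valid = true;
        // Schema violations are reported through this handler instead of being thrown.
        settings.ValidationEventHandler += (sender, e) =>
        {
            if (e.Severity == XmlSeverityType.Error)
            {
                valid = false;
            }
        };

        try
        {
            using (XmlReader reader = XmlReader.Create(new StringReader(messageXml), settings))
            {
                while (reader.Read()) { } // reading the document drives validation
            }
        }
        catch (XmlException)
        {
            return false; // the message is not even well-formed XML
        }

        return valid;
    }
}
```

With this shape, the web method can keep a single `string` parameter across message versions; supporting a new contract means adding another schema rather than regenerating the WSDL.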

Here is a nice article by Rockford Lhotka

However, I think that if you have a good middle layer, adding a true service layer should be a small issue.

Microsoft Architecture

Do they have some implementation code? My interpretation is the same as yours. It is a decent architecture if we are both right. The last time I used the Pet Shop .NET architecture, it left a very bad taste in my mouth. :)

Tuesday, July 25, 2006

Friday, July 21, 2006

SOA Friendly Architecture version of Jeffrey Palermo's Architecture -- Architecture

Please Note:

This post is inspired by Jeffrey Palermo's post on Application's Architecture

Though it is quite superior to the .NET Pet Shop architecture, I really doubt that this architecture will scale to a distributed computing scenario, just as the Pet Shop architecture does not. Jeffrey may have an answer for this. So I came up with this SOA-friendly architecture, which is being used at one of my clients' sites. I am also going to include some features from Jeffrey's architecture, like the presentation layer (MVP vs MVC), in my architecture.

Note: The author of this blog does not believe that you should use a web service in a standalone application when none is required. But we live in a world where we don't make the rules. If your database is protected by another firewall, you may find this architecture handy. It is more important to have a logical separation in the architecture that will scale well if the application is deployed in a distributed manner.

Here is SOA friendly Architecture

Here is deployment view

Major Components

UI Components/Views

Provide User Interface

Controllers

Get the business objects from the Gateway and provide them to the UI.

Gateway/Factory

It is the factory layer that provides the business objects. It knows how to create an object or get it across network boundaries. It first looks in the repository; if the object is not there, it gets the object across the wire. Our example is going to exploit the capabilities of a web service. It converts the proxy object into a real domain object using the Mapper utility.

References:

http://www.martinfowler.com/eaaCatalog/gateway.html

http://www.martinfowler.com/eaaCatalog/repository.html

http://www.martinfowler.com/eaaCatalog/dataTransferObject.html

Business Object Manager

It populates the business objects using the data objects. This is where one can aggregate the business objects before they are transferred across the network.

Business Object/Entity Object

Contains the attributes and operations related to a domain entity.

Process Object/Workflow Object

Business objects collaborate to perform operations that are useful to users.

Data Object

Data objects retrieve the data from the database. They contain all the CRUD operations.

Here is Code.

Gateway

namespace Gateway

{

public class Contact

{

static Contact()

{

//

}

private static List<BusinessObject.Contact> contactListCache = null;

private static Object syncRoot = new Object();

public static void ClearCache()

{

lock (syncRoot)

{

contactListCache = null;

}

}

public List<BusinessObject.Contact> GetContact()

{

return ContactList;

}

public List<BusinessObject.Contact> ContactList

{

get

{

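if (contactListCache == null)

{

lock (syncRoot)

{

// Re-check inside the lock so that only one thread calls the web service.

if (contactListCache == null)

{

ContactWS.ContactService ws = new ContactWS.ContactService();

ContactWS.Contact[] proxyContactList = ws.GetContacts();

// Assign the cache only once the list is fully populated, so other threads never see an empty list.

contactListCache = (List<BusinessObject.Contact>)Mapper.MapProperties_Fields((System.Collections.IList)proxyContactList, typeof(List<BusinessObject.Contact>), typeof(BusinessObject.Contact));

}

}

}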

return (contactListCache);

}

}

}

}

namespace Gateway

{

public abstract class Mapper

{

public static object MapProperties_Fields(object SourceObj, Type DestinationType)

{

if (SourceObj == null)

{

return null;

}

Type sourceType = SourceObj.GetType();

object destinationObj = Activator.CreateInstance(DestinationType);

foreach (PropertyInfo sourceProperty in sourceType.GetProperties())

{

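MemberInfo[] destinationMembers = DestinationType.GetMember(sourceProperty.Name);

// Skip source properties that have no counterpart on the destination type.

if (destinationMembers.Length == 0)

{

continue;

}

MemberInfo destinationMember = destinationMembers[0];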

if (destinationMember.MemberType == MemberTypes.Property)

{

PropertyInfo destinationProperty = ((PropertyInfo)(destinationMember));

if ((destinationProperty.CanWrite == true))

{

if (destinationProperty.PropertyType.Equals(sourceProperty.PropertyType))

{

destinationProperty.SetValue(destinationObj, sourceProperty.GetValue(SourceObj, null), null);

}

}

}

}

return destinationObj;

}

public static IList MapProperties_Fields(IList SourceList, Type DestinationListType, Type DestinationElementType)

{

if (SourceList == null)

{

return null;

}

IList destinationList = ((IList)(Activator.CreateInstance(DestinationListType)));

foreach (object sourceObj in SourceList)

{

destinationList.Add(MapProperties_Fields(sourceObj, DestinationElementType));

}

return destinationList;

}

}

}
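As a compact illustration of what the Mapper does, the sketch below copies same-named, same-typed properties from a proxy object onto a domain object. `ProxyContact`, `DomainContact`, and `PropertyCopier` are hypothetical names used only for this example; the generic `Copy<TDest>` method is a variation on the idea, not the Mapper class listed above.

```csharp
using System;
using System.Reflection;

// Hypothetical stand-in for a generated web service proxy type.
public class ProxyContact
{
    public string Name { get; set; }
    public int Age { get; set; }
    public string TransportOnlyField { get; set; } // has no counterpart on the domain type
}

// Hypothetical stand-in for the real domain object.
public class DomainContact
{
    public string Name { get; set; }
    public int Age { get; set; }
}

public static class PropertyCopier
{
    // Copies every readable source property onto a writable destination
    // property with the same name and the same type.
    public static TDest Copy<TDest>(object source) where TDest : class, new()
    {
        if (source == null)
        {
            return null;
        }

        TDest destination = new TDest();
        foreach (PropertyInfo sourceProperty in source.GetType().GetProperties())
        {
            PropertyInfo destinationProperty = typeof(TDest).GetProperty(sourceProperty.Name);
            if (destinationProperty != null
                && sourceProperty.CanRead
                && destinationProperty.CanWrite
                && destinationProperty.PropertyType == sourceProperty.PropertyType)
            {
                destinationProperty.SetValue(destination, sourceProperty.GetValue(source, null), null);
            }
        }
        return destination;
    }
}
```

Properties that exist only on the proxy are ignored, which is what lets the service contract carry extra fields without breaking the domain model.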

WebService

namespace BusinessObjectWebservice

{

/// <summary>

/// Web service that exposes contacts to the Gateway layer.

/// </summary>

[WebService(Namespace = "http://tempuri.org/")]

[WebServiceBinding(ConformsTo = WsiProfiles.BasicProfile1_1)]

[ToolboxItem(false)]

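// Named ContactService so that the generated proxy matches ContactWS.ContactService used by the Gateway.

public class ContactService : System.Web.Services.WebService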

{

[WebMethod]

public List<BusinessObject.Contact> GetContacts()

{

BusinessObjectManager.Contact contactManager = new BusinessObjectManager.Contact();

return(contactManager.GetContacts());

}

}

}

Business Object Manager

namespace BusinessObjectManager

{

public class Contact

{

public List<BusinessObject.Contact> GetContacts()

{

DataTable dataTable;

List<BusinessObject.Contact> list = new List<BusinessObject.Contact>();

dataTable = DataObject.Contact.GetContact();

try

{

foreach (DataRow dataRow in dataTable.Rows)

{

BusinessObject.Contact contact = new BusinessObject.Contact(dataRow[0].ToString(), dataRow[1].ToString(), dataRow[2].ToString(), Int32.Parse(dataRow[3].ToString()));

list.Add(contact);

}

}

catch (Exception ex)

{

throw; // rethrow without resetting the stack trace

}

finally

{

dataTable = null;

}

return(list);

}

}

}

Business Object

namespace BusinessObject

{

public class Contact

{

private String _userName;

private String _name;

private String _phoneNumber;

private int _age;

public Contact()

{

}

public Contact(string userName, string name,String phoneNumber, int age)

{

_userName = userName;

_name = name;

_phoneNumber = phoneNumber;

_age = age;

}

public String UserName

{

get

{

return _userName;

}

set

{

_userName = value;

}

}

public String Name

{

get

{

return _name;

}

set

{

_name = value;

}

}

public String PhoneNumber

{

get

{

return _phoneNumber;

}

set

{

_phoneNumber = value;

}

}

public int Age

{

get

{

return _age;

}

set

{

_age = value;

}

}

}

}

Data Object

namespace DataObject

{

public class Contact

{

private const String ContactTableName = "Contact";

private class Fields

{

public const string UserName = "Contact_User_Name";

public const string Name = "Contact_Name";

public const string PhoneNumber = "Contact_Phone_Number";

public const string Age = "Contact_Age";

}

public static DataTable GetContact()

{

System.Text.StringBuilder sql = new System.Text.StringBuilder();

System.Data.DataTable dataTable;

//Build the SQL

AppendBaseSelectColumns(sql);

sql.Append(" FROM");

sql.Append( " " + ContactTableName);

try

{

dataTable = GetDataTable(sql.ToString());

}

catch (Exception ex)

{

//Log the error

throw; // rethrow without resetting the stack trace

}

return(dataTable);

}

private static String AppendBaseSelectColumns(System.Text.StringBuilder sql)

{

sql.Append("SELECT");

sql.Append(" " + Fields.UserName + ",");

sql.Append(" " + Fields.Name + ",");

sql.Append(" " + Fields.PhoneNumber + ",");

sql.Append(" " + Fields.Age );

return sql.ToString();

}

private static DataTable GetDataTable(string sql)

{

//In real life this would connect to the database and return the query result; here it returns stub data.

DataTable dataTable = new DataTable();

dataTable.Columns.Add(new DataColumn("Contact_User_Name", typeof(String)));

dataTable.Columns.Add(new DataColumn("Contact_Name", typeof(String)));

dataTable.Columns.Add(new DataColumn("Contact_Phone_Number", typeof(String)));

dataTable.Columns.Add(new DataColumn("Contact_Age", typeof(System.Int32)));

dataTable.Rows.Add(new Object[]{ "VK", "Vikas","222-222-2222",int.MaxValue});

dataTable.Rows.Add(new Object[] { "SV", "Survic", "222-222-9999", int.MaxValue });

return dataTable;

}

}

}

Presenter/Controller

namespace Presenter

{

public class ContactPresenter

{

public ContactPresenter(View.IContactView view)

{

_view = view;

_view.LookUp += new EventHandler(_view_LookUp);

_view.Persist += new EventHandler(_view_Persist);

}

private View.IContactView _view;

private BusinessObject.Contact _currentContact;

public View.IContactView View

{

get { return _view; }

}

public BusinessObject.Contact CurrentContact

{

get { return _currentContact; }

}

public void SaveContact()

{

if (_currentContact != null)

{

_currentContact.Name = _view.ContactName;

_currentContact.PhoneNumber = _view.ContactPhoneNumber;

_currentContact.Age = _view.ContactAge;

}

}

public void LookUpContactByUserName(string userName)

{

Gateway.Contact contactGateway = new Gateway.Contact();

List<BusinessObject.Contact> contactList = contactGateway.GetContact();

foreach (BusinessObject.Contact contact in contactList)

{

if (contact.UserName.ToUpper().Trim().Equals(userName.ToUpper().Trim()))

{

_currentContact = contact;

break;

}

}

// Nothing to display if no contact has been found yet.

if (_currentContact == null)

{

return;

}

_view.ContactUserName = _currentContact.UserName;

_view.ContactName = _currentContact.Name;

_view.ContactPhoneNumber = _currentContact.PhoneNumber;

_view.ContactAge = _currentContact.Age;

}

private void _view_LookUp(object sender, EventArgs e)

{

this.LookUpContactByUserName(_view.UserNameToLookUp);

}

private void _view_Persist(object sender, EventArgs e)

{

this.SaveContact();

}

}

}

I am retiring this implementation in favor of a new implementation using WCF, though the architecture remains the same.

http://vikasnetdev.blogspot.com/2006/12/my-default-soa-architecture-using-wcf.html

Converting NUnit Tests to MSTest

I have been deliberating for some time whether to stick with NUnit or go for MSTest.

http://vikasnetdev.blogspot.com/2006/07/eight-point-checks-for-servicing.html

Advantages of MSTest over NUnit

1. Excellent IDE Integration

2. Code Generation

3. New Attributes

4. Enhancements to the Assert class

5. Built-in support for testing non-public members.

After hearing from a colleague that debugging NUnit tests does not work well with Visual Studio 2005, I decided to plunge into MSTest. The first step is to figure out how to move existing NUnit tests to MSTest. Here are the steps.

Step 1: Start with James Newkirk’s blog post

Step 2: Download the NUnit Converter V1.0 RC1 from workspace

Step 3: Install the Guidance Automation Extensions & Toolkits from link

Step 4: Double-click the file “NUnit Converter.msi” to install the converter. One caveat: it has not been tested in any directory other than the default directory. This of course does not mean it will not work in a different directory; it just means that it might not. Also, the rest of the instructions assume you have put it in the default directory. You can think of this as a strong hint to leave it in the default directory.

Step 5: Start Visual Studio 2005 Team System.

Step 6: Open a solution file that has existing NUnit test code.

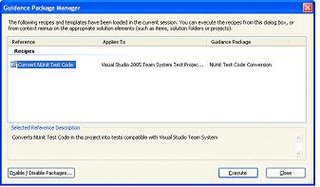

On the Tools menu, click Guidance Package Manager. A dialog box should open, see below. You should check to make sure that the “Convert NUnit Test Code” Recipe is present. Then click Close.

Step 7: You have completed the installation.

Step 8: Back-up the files and their associated project and solution files. This step is critically important. There is no automated restore of your files back to a previous state.

Step 9: Start Visual Studio 2005 Team System.

On the File menu, navigate to Open, click Project/Solution. Navigate to the directory where the Project/Solution is contained and then select the file you wish to open.

On the File menu, navigate to New, click Project. Select the appropriate (C# or VB.NET) test project and place it in the directory of your choosing. See “Tests must be in a Test Project” below.

Copy each file that contains NUnit test code that you want to be converted into the test project.

Add any required references (including a reference to NUnit) and compile the test project. Once the code compiles, run the tests in NUnit to ensure that you are starting with a working test harness. This step is optional but I like to do it because if the conversion process introduces problems, I know that I started with a working baseline.

Step 10: Now onto the conversion process. Right-Click the test project that you created in previous step in the Solution Explorer. Click the Convert NUnit Test Code. As each file is being converted it will be opened in Visual Studio. When the conversion is complete, the conversion results will be displayed.

Once you have completed the conversion process you should disable the guidance package. The reason is that every time you right-click in the Solution View the guidance package looks for NUnit test code. If there isn’t any to find it just wastes time. On the Tools menu, click Guidance Package Manager. A dialog box should open. Click Enable/Disable Packages. You should uncheck “NUnit Test Code Conversion”. Then click OK.
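To give a feel for what changes during conversion, here is a small sketch of the attribute correspondence between the two frameworks, applied as plain text replacement. This is not how the NUnit Converter works internally; `NUnitToMSTestSketch` is a hypothetical helper, and a real conversion also needs signature changes (for example, an MSTest `[ClassInitialize]` method must be static and take a `TestContext`) that this sketch ignores.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper that illustrates the NUnit-to-MSTest attribute mapping.
public static class NUnitToMSTestSketch
{
    private static readonly KeyValuePair<string, string>[] AttributeMap =
    {
        new KeyValuePair<string, string>("[TestFixtureSetUp]", "[ClassInitialize]"),
        new KeyValuePair<string, string>("[TestFixtureTearDown]", "[ClassCleanup]"),
        new KeyValuePair<string, string>("[TestFixture]", "[TestClass]"),
        new KeyValuePair<string, string>("[SetUp]", "[TestInitialize]"),
        new KeyValuePair<string, string>("[TearDown]", "[TestCleanup]"),
        new KeyValuePair<string, string>("[Test]", "[TestMethod]"),
    };

    public static string Convert(string source)
    {
        // Swap the framework namespace first, then each attribute.
        string result = source.Replace(
            "using NUnit.Framework;",
            "using Microsoft.VisualStudio.TestTools.UnitTesting;");

        foreach (KeyValuePair<string, string> pair in AttributeMap)
        {
            // Keys include the closing bracket, so "[Test]" never matches "[TestFixture]".
            result = result.Replace(pair.Key, pair.Value);
        }
        return result;
    }
}
```

Basic assertions such as `Assert.AreEqual` and `Assert.IsTrue` have the same names in both frameworks, which is why most of the work is in the namespace and the attributes.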

Saturday, July 15, 2006

Domain Driven Design vs Data Driven Design -- Domain Driven Design

Survic has very nicely argued that database storage and retrieval make up 80% (I would say 70%) of an application. Mock-ups, screen design, and the database will help to complete 80 percent of the system.

I agree 100% that if you have a short-term project with no future releases, data driven design is more efficient. My argument is that mock-ups, domain design, and database design (in that order; domain design and database design could be parallel activities in some organizations) will not only help achieve 100% but are also cost-effective for a project with multiple releases.

The remaining 20% is too important to leave open to the risk of communication failure

Nothing makes more sense to a business user than mock-ups, screenshots, or a prototype. You win 80 percent of the battle if you have these ready along with the database design. But the remaining 20% is too important to leave open to communication failure.

The remaining 20% is like the collaboration mechanism that integrates all the parts of a plane and helps you fly it to its destination. Even if all the parts are complete, getting that mechanism wrong results in system failure.

Now back to the real world: we do get the 20% right in the sense that it delivers the business value, but it is written in a procedural style and hard-coded in the front end. This results in a weak business layer. Experienced developers will notice patterns, and in the next iteration we will refactor the 20%. That brings me to the next point.

Domain Driven Design requires more time.

Domain Driven Design is more of a reverse thought process. As you put it, first figure out the data needs and then perform the domain design. The problem is that thinking in terms of data needs will create communication failure. There is a good case study in Domain Driven Design (I have read only 10% of the book). Here is an excerpt from the book:

Users:

So when we change the customs clearance point, we need to redo the whole routing plan.

Developer:

Right. We'll delete all the rows in the shipment table with that cargo id and we will pass the origin, destination, and the new customs clearance point into the Routing Service, and it will re-populate the table. We'll have to have a Boolean in the Cargo so we'll know there is data in the shipment table

Users:

Delete the rows. OK, whatever

Reference: Domain Driven Design -- by Eric Evans.
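For contrast, here is a rough sketch of how the same change might read when the code speaks the users' language instead of talking about rows and Boolean flags. The class and method names are my own illustration, loosely borrowing the book's cargo routing vocabulary; they are not code from the book.

```csharp
using System;
using System.Collections.Generic;

// What the users care about: where the cargo starts, ends, and clears customs.
public sealed class RouteSpecification
{
    public string Origin { get; }
    public string Destination { get; }
    public string CustomsClearancePoint { get; }

    public RouteSpecification(string origin, string destination, string customsClearancePoint)
    {
        Origin = origin;
        Destination = destination;
        CustomsClearancePoint = customsClearancePoint;
    }

    public RouteSpecification WithCustomsClearancePoint(string newPoint)
    {
        return new RouteSpecification(Origin, Destination, newPoint);
    }
}

// The routing plan, expressed as an ordered list of stops.
public sealed class Itinerary
{
    public IList<string> Stops { get; }

    public Itinerary(IEnumerable<string> stops)
    {
        Stops = new List<string>(stops).AsReadOnly();
    }
}

public interface IRoutingService
{
    Itinerary Route(RouteSpecification specification);
}

public sealed class Cargo
{
    public RouteSpecification Spec { get; private set; }
    public Itinerary Itinerary { get; private set; }

    public Cargo(RouteSpecification spec, IRoutingService routingService)
    {
        Spec = spec;
        Itinerary = routingService.Route(spec);
    }

    // "When we change the customs clearance point, we need to redo the whole routing plan."
    public void ChangeCustomsClearancePoint(string newPoint, IRoutingService routingService)
    {
        Spec = Spec.WithCustomsClearancePoint(newPoint);
        Itinerary = routingService.Route(Spec);
    }
}

// Trivial stand-in routing service used only for this illustration.
public sealed class DirectRoutingService : IRoutingService
{
    public Itinerary Route(RouteSpecification specification)
    {
        return new Itinerary(new[]
        {
            specification.Origin,
            specification.CustomsClearancePoint,
            specification.Destination
        });
    }
}
```

A user could follow `ChangeCustomsClearancePoint` line by line, which is the kind of shared vocabulary the excerpt is arguing for.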

There was no domain analysis in most projects that I have done. You have screenshots, process flow or activity diagrams, and the database ready, so let us move to development. Class diagrams reflect the database more than the domain.

So you are going to get all the entity classes right and have no, or only light, controller or process classes. Experienced developers will notice all the common patterns, but there is no time to apply them as there is a deadline to meet. In the next release, experienced developers will sneak in design patterns, controllers, and process classes. If something breaks in a part of the application that the release's impact analysis scoped as unaffected, you will have to answer to the project manager.

Domain Driven Design is good and no Domain Driven Design has costs

In my analysis, domain driven analysis will help you get 10 to 15% of that 20% right in the first release. The return on investment is higher in the long term, and the risk is lower. Performing domain analysis before gathering data requirements helps communication, and skipping domain analysis has costs.

People understand Mock Screens More

In theory, perhaps, you could present a user with any view of a system, regardless of what lies beneath. But, in practice, a mismatch causes confusion at best—bugs at worst. Consider a simple example of how users are misled by superimposed model of bookmarks for Web sites in current releases of Microsoft Internet Explorer.

Of course, an unadorned view of the domain model would definitely not be convenient for the user in most cases. But trying to create in the UI an illusion of a model other than the domain model will cause confusion unless the illusion is perfect.

People don’t understand Objects

MODEL-DRIVEN DESIGN calls for an implementation technology in tune with the particular modeling paradigm being applied. At present, the dominant paradigm is object-oriented design, and most complex projects these days set out to use objects. The reasons vary: some are circumstantial, and others derive from the advantages that come from wide usage itself.

Many of the reasons teams choose the object paradigm are not technological, or even intrinsic to objects. But right out of the gate, object modeling does strike a nice balance of simplicity and sophistication.

The fundamentals of object oriented design seem to come naturally to most people. Although some developers miss the subtleties of modeling, even non technologists can follow a diagram of an object model.

Yet, simple as the concept of object modeling is, it has been rich enough to capture important domain knowledge. And it has been supported from the outset by development tools that allowed a model to be expressed in software.

Objects are already understood by a community of thousands of developers, project managers, and all other specialists involved in project work.

When a design is based on a model that reflects the basic concerns of users and domain experts, the bones of the design can be revealed to the user to a greater extent than with other design approaches. Revealing the model gives the user more access to the potential of the software and yields consistent, predictable behavior.

Reference: Domain Driven Design – by Eric Evans

Monday, July 10, 2006

Data Driven Design to Domain Driven Design—Facilitator Fit -- Fit

After exploring Fit, I am convinced that Domain Driven Design is the way to go.

Data Driven Design creates a communication gap between the business user and the programmer. It forces the programmer to think more about persistence and data storage needs than about the problem domain. The programmer ends up throwing a lot of technical mumbo jumbo at the business user, which leads to communication failure.

When the business user and the programmer arrive at a Fit table, the next step is to map the Fit table to the software's business layer in order to validate it. The mapping will be smoother if the business layer's class model mirrors the problem domain. Close mirroring encourages the programmer to speak in the business user's language and facilitates good communication.

I was employing data driven design with pragmatic use of design patterns. Now I am going to focus on Domain Driven Design and speak in the business user's language.

For a start, I am going to read Domain Driven Design by Eric Evans.

Tuesday, July 04, 2006

Eight Point Checks for Servicing Software -- Servicing Software Part 3

I am updating my post, part 2. I am replacing the term NUnit with automated testing and NAnt with continuous integration build.

With the advent of Team System, I think that I am going to explore its automated unit testing and continuous integration capabilities. It was a hard decision, since I really loved these open source frameworks. I think that they did a great service but have outlived their usefulness.

I am hoping that Team System has done a good job of integrating these capabilities with the IDE.

For the next couple of days I am going to explore Team System's capabilities.
